CoreWeave and NVIDIA Rubin are Redefining AI Infrastructure

Artificial intelligence infrastructure is moving into a new phase, one defined less by experimentation and more by dependable, production-scale deployment. Against that backdrop, CoreWeave has confirmed plans to integrate the NVIDIA Rubin platform into its AI cloud, broadening the options available to customers building agentic AI, reasoning systems and large-scale inference workloads. Expected to be among the first cloud providers to deploy NVIDIA Rubin in the second half of 2026, CoreWeave is positioning itself at the front of the next compute cycle as AI systems grow in complexity and scale.

The announcement reflects a deliberate strategy rather than a one-off hardware refresh. CoreWeave’s cloud has been designed from the outset to operate across multiple generations of accelerated computing, allowing customers to align workloads with the most appropriate architecture as requirements evolve. By adding NVIDIA Rubin, the company expands the performance envelope available to enterprises, AI laboratories and fast-growing startups running production workloads where reliability and predictability matter as much as raw speed.

Why NVIDIA Rubin Matters For Modern AI Workloads

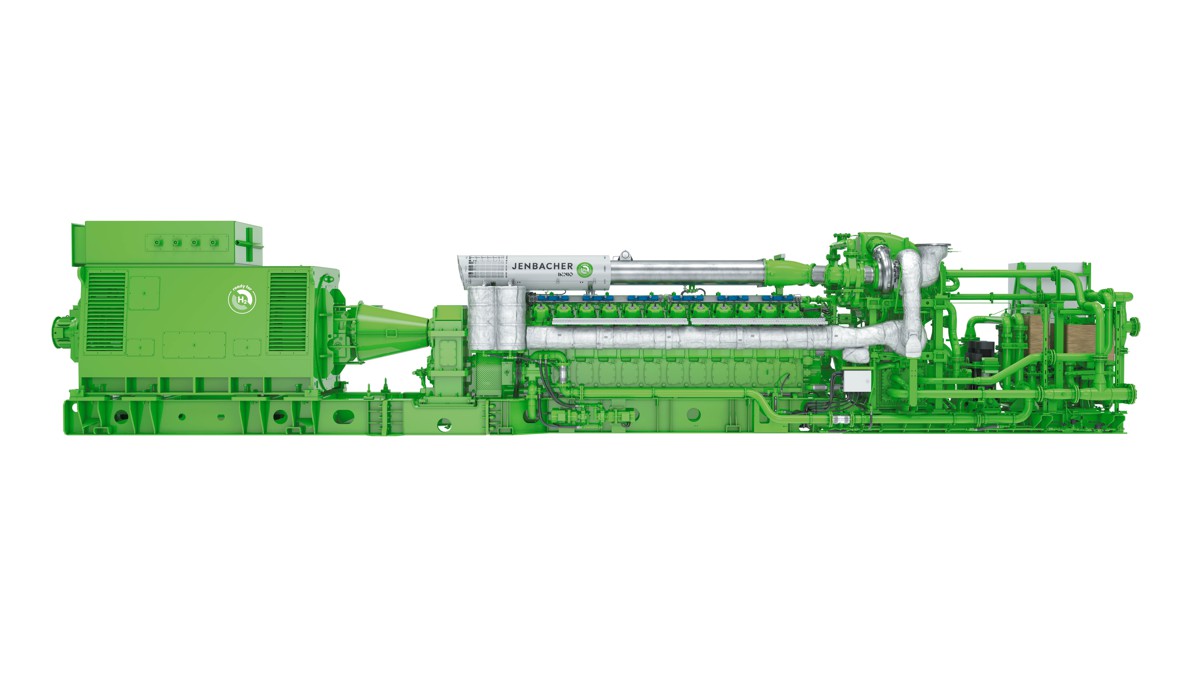

The NVIDIA Rubin platform has been developed to support a new class of demanding AI workloads. These include agentic AI systems capable of autonomous decision-making, advanced reasoning models and large-scale mixture-of-experts architectures that require sustained compute over long training and inference cycles. In practical terms, Rubin is designed for environments where downtime, variability or inefficiency quickly translate into real-world costs.

Aimed at workloads such as drug discovery, genomic research, climate simulation and fusion energy modelling, Rubin provides the scale and efficiency needed to keep complex models running smoothly. On the CoreWeave platform, this translates into a more flexible environment for AI builders who need to train, serve and scale advanced systems without constantly re-engineering their infrastructure as demands change.

Built For Choice At Production Scale

Choice has become a defining issue for organisations deploying AI at scale. As models grow larger and more specialised, the ability to select the right system for the right job can determine both performance and cost efficiency. CoreWeave’s approach centres on offering that flexibility without forcing customers to manage the underlying complexity themselves.

Michael Intrator, Co-founder, Chairman and Chief Executive Officer of CoreWeave, framed the significance of Rubin in those terms: “The NVIDIA Rubin platform represents an important advancement as AI evolves toward more sophisticated reasoning and agentic use cases. Enterprises come to CoreWeave for real choice and the ability to run complex workloads reliably at production scale. With CoreWeave Mission Control as our operating standard, we can bring new technologies like Rubin to market quickly and enable our customers to deploy their innovations at scale with confidence.”

That emphasis on operational readiness underlines why the integration matters. The challenge is no longer accessing powerful hardware, but deploying it in a way that remains stable and observable as workloads scale.

NVIDIA And CoreWeave Shaping The AI Factories Of The Future

From NVIDIA’s perspective, Rubin marks a shift towards infrastructure designed specifically for reasoning and agentic AI. According to Jensen Huang, Founder and Chief Executive Officer of NVIDIA, partnerships with cloud specialists such as CoreWeave are essential to translating that capability into production reality: “CoreWeave’s speed, scale, and ingenuity make them an essential partner in this new era of computing. With Rubin, we’re pushing the boundaries of AI from reasoning to agentic AI and CoreWeave is helping turn that potential into production as one of the first to deploy it later this year. Together, we’re not just deploying infrastructure, we’re building the AI factories of the future.”

The phrase “AI factories” is more than rhetoric. It reflects an industry-wide shift towards highly integrated environments where compute, networking, power and software orchestration operate as a unified system. Rubin is designed to slot into that model, while CoreWeave’s platform is built to run it at scale.

From Hardware To Operating Standard

A critical differentiator for CoreWeave lies in how new platforms are brought online. NVIDIA Rubin will be deployed using CoreWeave Mission Control, described as the industry’s first operating standard for training, inference and agentic AI workloads. Rather than treating infrastructure as a collection of discrete components, Mission Control unifies security, expert-led operations and observability into a single operating framework.

Integrated with the NVIDIA Reliability, Availability and Serviceability Engine, Mission Control provides real-time diagnostics across fleet, rack and cabinet levels. For customers, this means clear visibility into system health and schedulable production capacity, a requirement for organisations running AI systems that must operate continuously and predictably.
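To make the idea of multi-level diagnostics concrete, here is a minimal Python sketch of a "worst-of" health roll-up over a hierarchy, followed by a count of what remains schedulable. It is purely illustrative: `Health`, `Node`, `rolled_up_health` and `schedulable` are hypothetical names, the nesting used in the example is an assumption, and nothing here reflects the actual Mission Control or NVIDIA RAS Engine interfaces, which the announcement does not describe.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List


class Health(IntEnum):
    """Hypothetical severity scale; a higher value means a worse state."""
    OK = 0
    DEGRADED = 1
    FAILED = 2


@dataclass
class Node:
    """One element of the hierarchy. `level` is a free-form label."""
    name: str
    level: str
    own_health: Health = Health.OK
    children: List["Node"] = field(default_factory=list)


def rolled_up_health(node: Node) -> Health:
    """A node is only as healthy as its worst descendant."""
    return max([node.own_health] + [rolled_up_health(c) for c in node.children])


def schedulable(node: Node, level: str) -> List[str]:
    """Names of nodes at `level` whose rolled-up health is OK."""
    found = []
    if node.level == level and rolled_up_health(node) == Health.OK:
        found.append(node.name)
    for child in node.children:
        found.extend(schedulable(child, level))
    return found


if __name__ == "__main__":
    # Assumed layout: one fleet, two racks, one rack with a failed component.
    fleet = Node("fleet-a", "fleet", children=[
        Node("rack-1", "rack"),
        Node("rack-2", "rack", children=[
            Node("component-7", "component", Health.FAILED),
        ]),
    ])
    print(rolled_up_health(fleet).name)
    print(schedulable(fleet, "rack"))
```

The design choice worth noting is that health propagates upward while scheduling decisions are made per level, so a single failed component removes only its own rack from the schedulable pool rather than the whole fleet.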

Engineering For Power, Cooling And Network Density

Modern AI platforms place intense demands on data centre infrastructure. High-density compute requires tightly managed power delivery, advanced liquid cooling and low-latency network integration. To address this, CoreWeave has developed its Rack Lifecycle Controller, a Kubernetes-native orchestrator that treats an entire NVIDIA Vera Rubin NVL72 rack as a single programmable entity.

By coordinating provisioning, power operations and hardware validation at rack level, the system ensures production readiness before customer workloads are deployed. This approach reduces deployment risk and shortens the time between hardware installation and usable capacity, a factor that has become increasingly important as demand for AI compute continues to outpace supply.
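The rack-level sequence described above can be pictured as a small state machine that refuses to mark a rack ready until every step has passed. The Python sketch below is an assumption-laden illustration, not CoreWeave's Rack Lifecycle Controller: the state names, the step names (`provision`, `power_on`, `validate_hardware`) and the `reconcile` function are all hypothetical, and the real controller's Kubernetes resources are not described in the announcement.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class RackState(Enum):
    """Hypothetical lifecycle states for a whole rack."""
    INSTALLED = "installed"
    PROVISIONED = "provisioned"
    POWERED = "powered"
    VALIDATED = "validated"
    READY = "ready"
    FAILED = "failed"


@dataclass
class Rack:
    name: str
    state: RackState = RackState.INSTALLED
    history: List[str] = field(default_factory=list)


# Ordered lifecycle: each step is paired with the state it produces.
LIFECYCLE = [
    ("provision", RackState.PROVISIONED),
    ("power_on", RackState.POWERED),
    ("validate_hardware", RackState.VALIDATED),
]


def reconcile(rack: Rack, steps: Dict[str, Callable[[Rack], bool]]) -> Rack:
    """Run every step in order; stop and mark FAILED at the first failure."""
    for step_name, next_state in LIFECYCLE:
        ok = steps[step_name](rack)
        rack.history.append(f"{step_name}:{'ok' if ok else 'failed'}")
        if not ok:
            rack.state = RackState.FAILED
            return rack
        rack.state = next_state
    # Only a rack that passed all steps is exposed to customer workloads.
    rack.state = RackState.READY
    return rack


if __name__ == "__main__":
    passing = {name: (lambda rack: True) for name, _ in LIFECYCLE}
    print(reconcile(Rack("rack-a"), passing).state)

    # Simulate a rack that fails hardware validation.
    failing = dict(passing, validate_hardware=lambda rack: False)
    bad = reconcile(Rack("rack-b"), failing)
    print(bad.state, bad.history)
```

Treating the rack as the unit of reconciliation means a validation failure keeps the entire rack out of service, which matches the article's point that readiness is established before workloads land.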

A Track Record Of Rapid Deployment

CoreWeave’s credibility in this space is underpinned by its track record. The company was the first cloud provider to offer general availability of NVIDIA GB200 NVL72 instances and the NVIDIA Grace Blackwell Ultra NVL72 platform. These deployments demonstrated CoreWeave’s ability to move quickly without compromising performance or reliability.

Supporting that hardware capability is a custom-built software stack optimised for AI workloads. Designed to accelerate deployment timelines while maintaining industry-leading standards, the stack allows customers to focus on model development and deployment rather than infrastructure management. This philosophy carries through to the planned Rubin integration.

Industry Perspective On Production Scale AI

Independent analysts see the combination of Rubin and platforms like CoreWeave as a necessary step in translating AI potential into practical outcomes. Dan O’Brien, President and Chief Operating Officer at The Futurum Group, highlighted the operational dimension of advanced workloads: “Workloads like drug discovery, climate modeling, and advanced robotics demand both cutting-edge compute and the ability to run it reliably at scale. The NVIDIA Rubin platform expands what is possible, and platforms like CoreWeave are what make those capabilities available in practice. That combination is what accelerates real progress.”

The emphasis on reliability echoes a broader industry consensus. As AI systems become embedded in research, industry and public sector applications, tolerance for instability diminishes rapidly.

Enabling Builders To Focus On Innovation

Once NVIDIA Rubin is fully integrated into the CoreWeave Cloud, customers will be able to concentrate on building advanced AI systems rather than managing infrastructure. By pairing Rubin’s agentic and reasoning capabilities with CoreWeave’s purpose-built software stack, the platform supports large-scale training, high-performance inference and low-latency agentic AI from a single environment.

This approach aligns with CoreWeave’s broader platform strategy, which aims to unify the essential tools required to run AI at production scale. High-performance compute, multi-cloud-compatible data storage and the software layer required to develop, test and deploy AI systems are brought together under one operational model.

Extending The Platform With Advanced Capabilities

Recent innovations illustrate how CoreWeave continues to extend that foundation. Serverless RL, described as the first publicly available fully managed reinforcement learning capability, allows organisations to experiment with and deploy reinforcement learning workflows without building bespoke infrastructure. Combined with Rubin, such capabilities are likely to support increasingly sophisticated AI systems.

Performance metrics reinforce the company’s positioning. CoreWeave has achieved industry-leading MLPerf benchmark results and remains the only AI cloud to earn top Platinum rankings in both SemiAnalysis ClusterMAX 1.0 and 2.0. These benchmarks provide third-party validation of the platform’s ability to deliver advanced AI infrastructure with consistency and efficiency.

Building The Essential Cloud For AI

CoreWeave describes itself as The Essential Cloud for AI, built for pioneers by pioneers. Trusted by leading AI labs, startups and global enterprises, the company positions its platform as a force multiplier that combines infrastructure performance with deep technical expertise. The planned deployment of NVIDIA Rubin reinforces that identity, signalling a commitment to staying ahead of the curve as AI workloads continue to evolve.

Rather than chasing novelty, the focus remains on operational excellence, scalability and choice. As agentic AI and reasoning models move from research environments into production systems, that focus may prove decisive in determining which platforms shape the next chapter of AI-driven innovation.