ASU’s Four-legged AI Robodog Champions a Caring Robotics Future

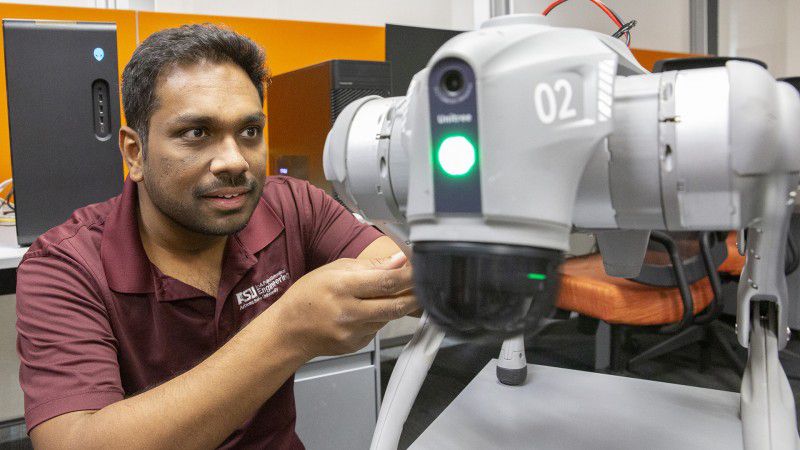

Arizona State University’s LENS Lab has unleashed an innovation with bite: the Unitree Go2 robotic dog. This nimble mechanical quadruped isn’t here to fetch – it’s engineered to save lives and make the world more accessible.

Outfitted with cutting-edge LiDAR, AI‑powered cameras, and a responsive voice interface, it’s learning to tread where humans hesitate: in search‑and‑rescue missions and as a guide for the visually impaired.

Robotics with Real‑World Purpose

Founded by Assistant Professor Ransalu Senanayake, the Laboratory for Learning Evaluation and Naturalization of Systems (LENS Lab) is on a mission. It’s not about abstract algorithms – it’s about forging tools that solve tangible, pressing problems.

The team isn’t just writing code for robots: it is crafting AI companions that can navigate dangerous environments and widen accessibility.

The lab pushes the envelope by equipping robots to perceive, reason, and adapt – transforming them from static machines into dynamic teammates.

Search‑and‑Rescue in a Canine Form

Take second-year master’s student Eren Sadıkoğlu, for example. His focus? Teaching the Unitree Go2 to navigate post‑disaster terrains using vision- and language‑based navigation powered by reinforcement learning.

This isn’t just about point‑A‑to‑B mobility – it’s about strategic, safe movement: jumping over rubble, ducking under debris, and executing agile manoeuvres.

Armed with RGB‑depth cameras and foot‑mounted touch sensors, the robodog can respond to unstable surfaces and unforeseen obstacles – venturing where human rescuers can’t, and keeping teams safer.

Guiding the Visually Impaired

Undergraduate student Riana Chatterjee is steering another exciting thread of research: using the Unitree Go2 as a guide for visually impaired users. Through YOLO, transformer-based depth estimation, and vision-language models (VLMs), the robot identifies objects, gauges distance, and articulates surroundings in real time.
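To make the idea concrete, here is a minimal sketch of how detections and depth could be fused into spoken-style guidance. It is not the lab's code: the `Detection` format, the toy depth map, and the helper names are all hypothetical stand-ins for what an object detector such as YOLO and a depth-estimation model might output, and a real system would pass richer scene context to a VLM.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Detection:
    """One detected object: a label and a pixel bounding box (hypothetical format)."""
    label: str
    x1: int
    y1: int
    x2: int
    y2: int


def object_distance(depth_map, det):
    """Median depth in metres inside the box; the median resists stray background pixels."""
    values = [depth_map[y][x]
              for y in range(det.y1, det.y2)
              for x in range(det.x1, det.x2)]
    return median(values)


def side_of(det, image_width):
    """Coarse direction from the box centre: left, ahead, or right."""
    centre = (det.x1 + det.x2) / 2
    if centre < image_width / 3:
        return "to your left"
    if centre > 2 * image_width / 3:
        return "to your right"
    return "ahead"


def describe(detections, depth_map):
    """Turn detections plus depth into short phrases, nearest object first."""
    width = len(depth_map[0])
    ranked = sorted(detections, key=lambda d: object_distance(depth_map, d))
    return [
        f"{d.label} {side_of(d, width)}, about {object_distance(depth_map, d):.1f} metres"
        for d in ranked
    ]


# Toy 4x6 depth map (metres) and two made-up detections, for illustration only.
depth = [
    [5.0, 5.0, 2.0, 2.0, 5.0, 5.0],
    [5.0, 5.0, 2.0, 2.0, 1.2, 1.2],
    [5.0, 5.0, 2.0, 2.0, 1.2, 1.2],
    [5.0, 5.0, 5.0, 5.0, 1.2, 1.2],
]
scene = [Detection("chair", 2, 0, 4, 3), Detection("bin", 4, 1, 6, 4)]
for phrase in describe(scene, depth):
    print(phrase)
```

Ordering the phrases nearest-first is one plausible design choice, on the assumption that the closest obstacle matters most to someone walking.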

“My project is about combining deep learning technologies to enable the robot to understand its surroundings and communicate that to a visually impaired person,” she says.

This could herald a future where robotic guide dogs step in where live animals are impractical – able to identify obstacles, map walkable paths, and deliver real‑time guidance.

Academic Rigour Meets Applied Innovation

Senanayake, visionary founder of LENS Lab, is blending theoretical insight with real-world impact. On the academic front, the lab focuses on making AI adaptable, explainable, and ethically robust – challenging conventional metrics of accuracy to ask: when do models fail, how transparent are they, can they adapt?

Meanwhile, the applied side, as demonstrated by the Go2 robodog, brings AI from the lab to the field – tackling hazards, enhancing human safety, and offering practical accessibility.

ASU’s leadership is backing it all. Ross Maciejewski, director of the School of Computing and Augmented Intelligence, underscores a curriculum that balances theory with real‑world projects: future problem‑solvers are being trained right now to deploy transformative solutions.

Insights from Recent Research

Recent studies reinforce – and expand – these efforts:

- A quiet, stable locomotion controller developed for the Unitree Go1 robot cut noise by half compared with standard motion controllers, improving user comfort – especially crucial for those relying on auditory cues.

- Research into aesthetic and functional design of robotic guide dogs shows that appearance matters: even for visually impaired users, societal perceptions, texture, and familiarity can influence acceptance – an important factor when developing technologies meant to integrate into daily life.

- User studies have highlighted key developer considerations: customizable control and communication styles, robust battery life, weather resilience, and intuitive interaction are non‑negotiable requirements for real-world adoption of guide‑dog robots.

Across Terrain, Across Needs

LENS Lab’s research could redefine what a service companion looks like – not with fur and paws, but circuits and sensors. These robotic dogs are already crossing boundaries: from rubble‑strewn disaster zones to bustling urban crosswalks, offering safety, guidance, and hope.

It’s a tangible chance to make AI not just smarter, but kinder.