Building Proof Into Intelligence as AI Moves Into Critical Infrastructure

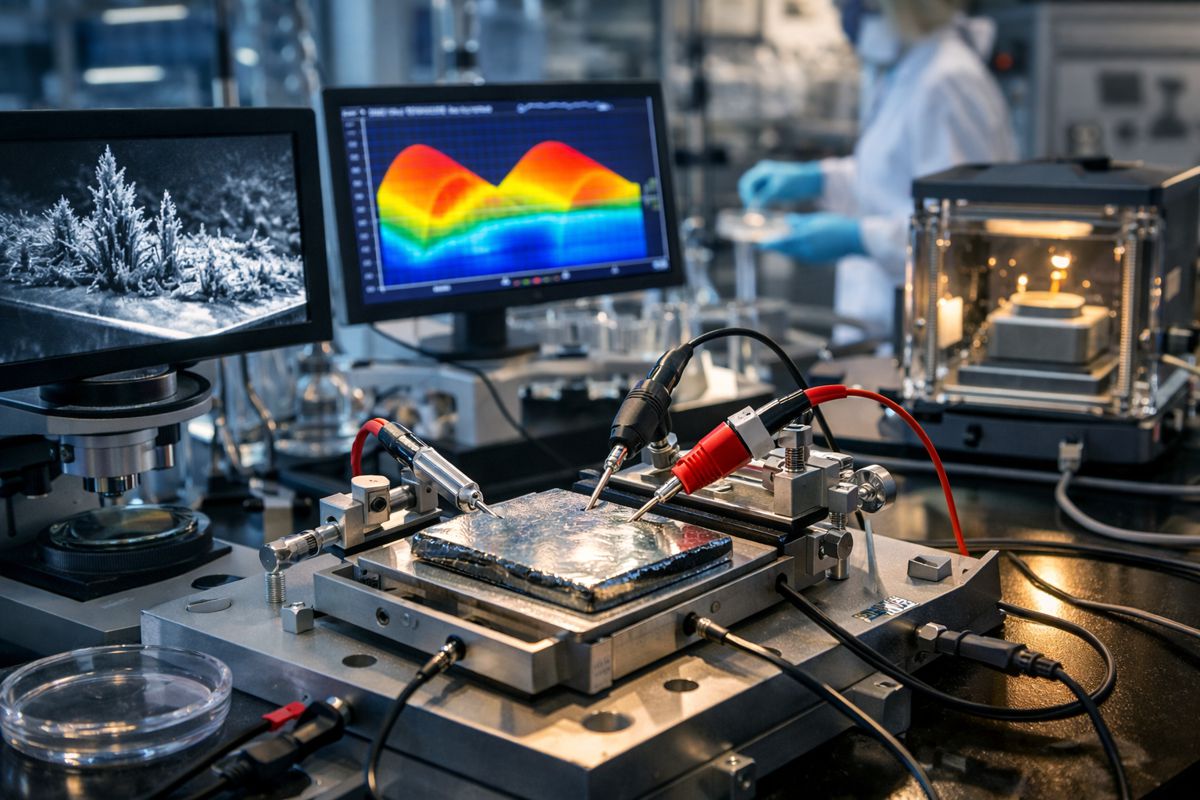

Midas has entered the public arena at a moment when artificial intelligence is no longer confined to experimental labs or consumer-facing software. AI systems are now being embedded deep inside infrastructure that underpins modern economies, from financial markets and industrial automation to defence systems, cloud platforms, and scientific research pipelines. In those environments, plausibility is not enough. Systems must be correct, demonstrably and consistently.

The company’s public launch follows a $10 million funding round led by Nova Global, marking an early vote of confidence in a proposition that challenges one of AI’s most fundamental assumptions. Rather than treating correctness as something to be tested after deployment, Midas is building mathematical proof directly into how AI systems reason, generate outputs, and interact with real-world systems.

This matters because AI adoption has outpaced the mechanisms needed to trust it. As models grow more capable and more autonomous, human oversight becomes increasingly impractical. In sectors where errors translate into financial loss, physical harm, or systemic risk, the absence of provable correctness is no longer a theoretical concern. It is a structural weakness.

Why Trust Has Become AI’s Defining Bottleneck

Artificial intelligence has reached an uncomfortable inflection point. Large-scale models now produce outputs that are fluent, confident, and often persuasive, yet their internal reasoning remains opaque. In construction, infrastructure planning, logistics optimisation, and industrial design, this creates a gap between what systems appear to know and what they can actually justify.

Across the wider technology ecosystem, researchers and regulators have begun to converge on the same conclusion. Explainability, monitoring, and post-hoc validation help, but they do not solve the core problem. Most modern AI systems are probabilistic by design. They generate likely answers, not provably correct ones. When those systems are tasked with optimising supply chains, controlling machinery, or supporting safety-critical decisions, likelihood is a fragile foundation.

Midas positions itself squarely at this fault line. Its approach reframes AI reliability not as a performance metric but as a mathematical property. By embedding formal verification into AI workflows, the company aims to replace confidence with evidence, and plausibility with proof.

A Proof-Native Approach to Artificial Intelligence

At the heart of Midas’ thesis is a simple but demanding idea. Intelligence deployed at scale should be held to the same evidentiary standards as the institutions it serves. Law relies on proof. Science advances through verification. Engineering demands specification and validation. AI, by contrast, has largely operated without a proof loop.

According to Midas, this absence has been tolerated because early AI systems were advisory rather than decisive. That distinction is eroding quickly. As AI systems are entrusted with more autonomy, the cost of unverified reasoning rises sharply. The company’s technology introduces mathematical evidence at the core of AI pipelines, verifying not only final outputs but also the reasoning and data dependencies that produce them.

This is not error checking at the end of a process. It is verification by construction. Reasoning is constrained from the outset, ensuring that outputs are admissible only if they satisfy formally defined correctness criteria. In effect, Midas is treating AI systems less like creative engines and more like components of critical infrastructure.

The Team Behind the Mathematics

Midas’ technical ambition is closely tied to the pedigree of its founding team. The company was formed by eleven medallists from the International Mathematical Olympiad and the International Olympiad in Informatics, competitions widely regarded as the most selective academic contests in mathematics and computer science. Participation alone is rare. Medallists represent the extreme tail of global technical talent.

The founding group brings experience from organisations where correctness is already non-negotiable, including Jane Street, Google, AWS, NVIDIA, and Mercor. Their academic backgrounds span institutions such as Stanford, MIT, Cambridge, Princeton, and Duke, reflecting deep exposure to both theoretical computer science and real-world system design.

This combination matters because formal verification has historically struggled to escape academia. Translating proofs into production-grade systems requires not only mathematical sophistication but also an understanding of industrial constraints, performance trade-offs, and operational risk. Midas’ leadership argues that this blend is precisely what allows verification to scale beyond niche applications.

From Probabilistic Outputs to Mathematical Guarantees

Shalim Monteagudo-Contreras, President and Co-Founder of Midas, frames the problem in stark terms. In environments where failure carries material consequences, he argues, an answer that is merely convincing is untenable.

“Modern AI produces fluent, convincing answers, but it cannot prove they are correct,” he said. “Midas is building the barrier between probabilistic outputs and real-world systems. We enforce correctness mathematically, so results are not inferred, argued, or hoped for, but proven before they are allowed through.”

The distinction he draws goes beyond accuracy metrics or benchmark scores. Fluency, he argues, is not auditable. Proof is. By requiring AI systems to satisfy formal constraints before outputs are accepted, Midas aims to create a hard boundary between exploratory computation and operational decision-making.
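The idea of a hard boundary can be illustrated with a small sketch. The Python below is not Midas' implementation, and every name in it (`Verified`, `admit`, `sorted_permutation_of`) is hypothetical. It assumes a toy task, sorting a list, and uses an ordinary runtime check as a stand-in for a formal proof checker: an untrusted proposal is passed downstream only if it satisfies an explicit specification.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class Verified(Generic[T]):
    """Hypothetical wrapper marking a value that passed the checker."""
    value: T


def admit(candidate: T, spec: Callable[[T], bool]) -> Optional[Verified[T]]:
    """Let a candidate through only if it satisfies the specification."""
    return Verified(candidate) if spec(candidate) else None


def sorted_permutation_of(source: list[int]) -> Callable[[list[int]], bool]:
    """Build a spec: the candidate is ordered and has exactly the items of source."""
    def spec(candidate: list[int]) -> bool:
        ordered = all(a <= b for a, b in zip(candidate, candidate[1:]))
        same_items = Counter(candidate) == Counter(source)
        return ordered and same_items
    return spec


data = [3, 1, 2]
spec = sorted_permutation_of(data)
accepted = admit([1, 2, 3], spec)  # a correct proposal passes the gate
rejected = admit([1, 2, 2], spec)  # a plausible but wrong proposal is blocked
```

The second proposal looks reasonable at a glance, since it is ordered and has the right length, yet it is rejected because it is not a rearrangement of the input. A production system would presumably replace the runtime predicate with a machine-checked proof, but the shape of the boundary is the same: nothing unverified crosses it.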

Evidence as the Missing Layer in AI Systems

Renzo Balcazar, CEO and Co-Founder of Midas, extends this argument by placing AI in historical context. Human institutions evolved around evidence because trust does not scale without it. From financial accounting to engineering standards, proof is the mechanism that allows complex systems to function reliably.

“Every human institution, from law to science to finance, runs on evidence. Artificial intelligence is the first form of intelligence that operates without it,” he said.

As AI systems increasingly operate faster than humans can meaningfully review, the risk of mistaking coherence for correctness grows. In infrastructure planning, logistics coordination, and industrial optimisation, errors may propagate long before they are detected. Midas’ thesis is that evidence must be generated alongside intelligence, not retrofitted after the fact.

Scaling Verification as Systems Grow More Complex

One of the persistent challenges in AI governance is scale. Manual review does not scale. Sampling does not scale. Human-in-the-loop oversight becomes impractical as systems become more autonomous and interconnected. This is where Midas’ approach diverges from traditional safety mechanisms.

Rodrigo Porto, Tech Lead at Midas, argues that trust emerges only when verification is intrinsic to system design. Verifying reasoning from the start, rather than checking for errors at the end, allows systems to grow in complexity without losing reliability. Mathematical verification, in this sense, acts as an internal governor.

By verifying data, reasoning paths, and outputs simultaneously, Midas aims to create AI systems that can be deployed in environments where mistakes are not an option. This includes sectors such as biotech, defence, hardware design, financial systems, and the underlying cloud infrastructure that increasingly supports construction and industrial technology platforms.
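What checking data, reasoning paths, and outputs together might look like can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of Midas' technology: the `Step` type, the `check` function, and the toy arithmetic task are all hypothetical, and replaying each step stands in for formal proof checking. The input data is pinned by a SHA-256 digest, every claimed reasoning step is recomputed independently, and the final output must match the verified chain.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    op: str       # "add" or "mul"
    operand: int
    claimed: int  # value the untrusted system says this step produces


OPS = {"add": lambda x, y: x + y, "mul": lambda x, y: x * y}


def digest(values: list[int]) -> str:
    """Fingerprint the input data so later tampering is detectable."""
    return hashlib.sha256(json.dumps(values).encode()).hexdigest()


def check(values: list[int], expected_digest: str,
          steps: list[Step], output: int) -> bool:
    # 1. Data: the inputs must match the pinned fingerprint.
    if digest(values) != expected_digest:
        return False
    # 2. Reasoning: replay every step, starting from the sum of the inputs.
    current = sum(values)
    for step in steps:
        fn = OPS.get(step.op)
        if fn is None or fn(current, step.operand) != step.claimed:
            return False
        current = step.claimed
    # 3. Output: the answer must be the result of the verified chain.
    return output == current


pinned = digest([2, 3])
steps = [Step("mul", 4, 20), Step("add", 1, 21)]
ok = check([2, 3], pinned, steps, 21)        # data, steps, and output all agree
tampered = check([2, 4], pinned, steps, 21)  # input no longer matches the digest
```

A failure at any of the three layers rejects the whole result, which is the property the paragraph above describes: an output is only as trustworthy as the data and reasoning behind it.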

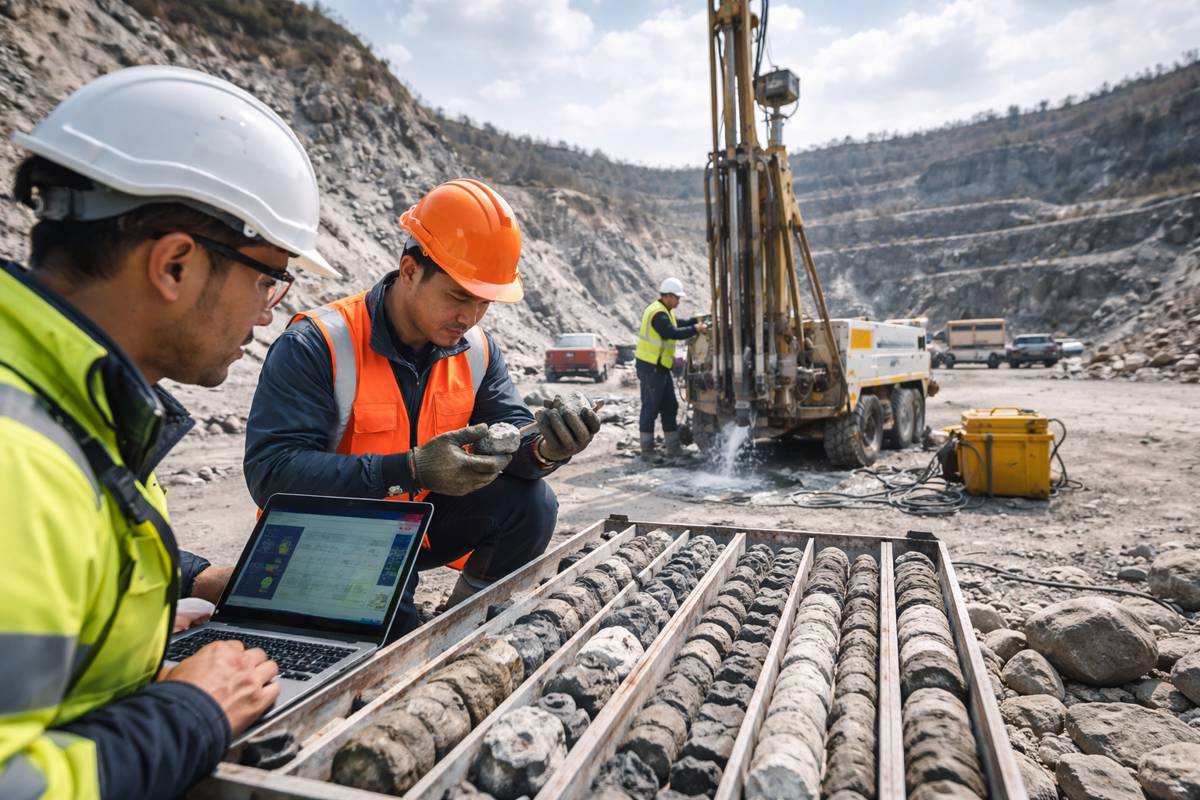

A Critical Building Block for Construction and Infrastructure

For the global construction and infrastructure ecosystem, the implications are significant. AI is already being used to optimise project schedules, assess risk, model structural behaviour, and manage supply chains. As these tools become more autonomous, the tolerance for error narrows sharply.

Infrastructure projects operate on long timelines and thin margins. Errors made early can cascade into delays, cost overruns, and safety risks years later. In this context, AI systems that can prove the correctness of their reasoning offer a fundamentally different risk profile. They move AI from an advisory role to a dependable component of decision-making.

Midas’ work aligns with a broader industry trend towards digital twins, verified simulations, and data-driven assurance in infrastructure planning. Mathematical verification provides a foundation for trusting these systems not because they perform well on average, but because they meet explicit, provable criteria.

Funding as a Signal of Strategic Intent

The $10 million funding round led by Nova Global is notable not only for its size but for what it represents. The firm’s investors include individuals with direct experience building and scaling some of the most consequential technology companies in the world. Their involvement signals confidence that proof-native AI addresses a structural gap rather than a transient market trend.

Carlo Agostinelli, founder of Nova Global, emphasised the long-term nature of the challenge Midas is tackling. “At Nova Global, we focus on backing founders with the potential to become historical figures,” he said. “Shalim Monteagudo-Contreras and Renzo Balcazar are already operating at that level. They’ve built a world-class team from scratch and are taking on one of the most fundamental challenges in AI: trust. Their proof-native approach to ensuring AI reliability demonstrates both the technical ambition and the founder-market fit that turn Midas into a generational company.”

The funding will be used to translate formal verification research into production-grade infrastructure, bridging a gap that has limited adoption in the past.

From Research to Operational Baseline

Midas is explicit that it is not following a conventional product cycle. Its leadership describes the company as a structural correction to how AI systems are built and deployed. In domains where correctness is essential, proof is not an enhancement. It is the baseline.

This framing resonates with sectors that already operate under rigorous standards, including civil engineering, industrial manufacturing, and regulated infrastructure. As AI becomes embedded deeper into these systems, the demand for mathematically grounded assurance is likely to grow.

Rather than asking organisations to trust AI because it performs well, Midas is asking a different question entirely. Can intelligence be required to prove itself before it acts? For industries where the cost of failure is measured in more than lost productivity, that question may soon become unavoidable.