Arbe and NVIDIA Advancing Ultra-HD Radar for Eyes-Off Driving at CES

Autonomous driving has never been short on ambition, but delivering safe, reliable and affordable hands-free and eyes-off capability remains one of the industry’s hardest challenges. Against that backdrop, Arbe Robotics Ltd has announced a collaboration that combines its ultra-high-definition perception radar with NVIDIA’s accelerated computing, forming a platform designed to support AI-based perception at true highway speeds.

The significance of the announcement lies not only in the technology itself, but in its readiness. For the first time, Arbe will publicly demonstrate an automotive-grade radar system that is prepared for integration into complete perception stacks. The showcase will take place at CES 2026, signalling that this is no longer a laboratory concept, but a solution edging towards large-scale deployment.

A Radar Platform Built for AI-Driven Perception

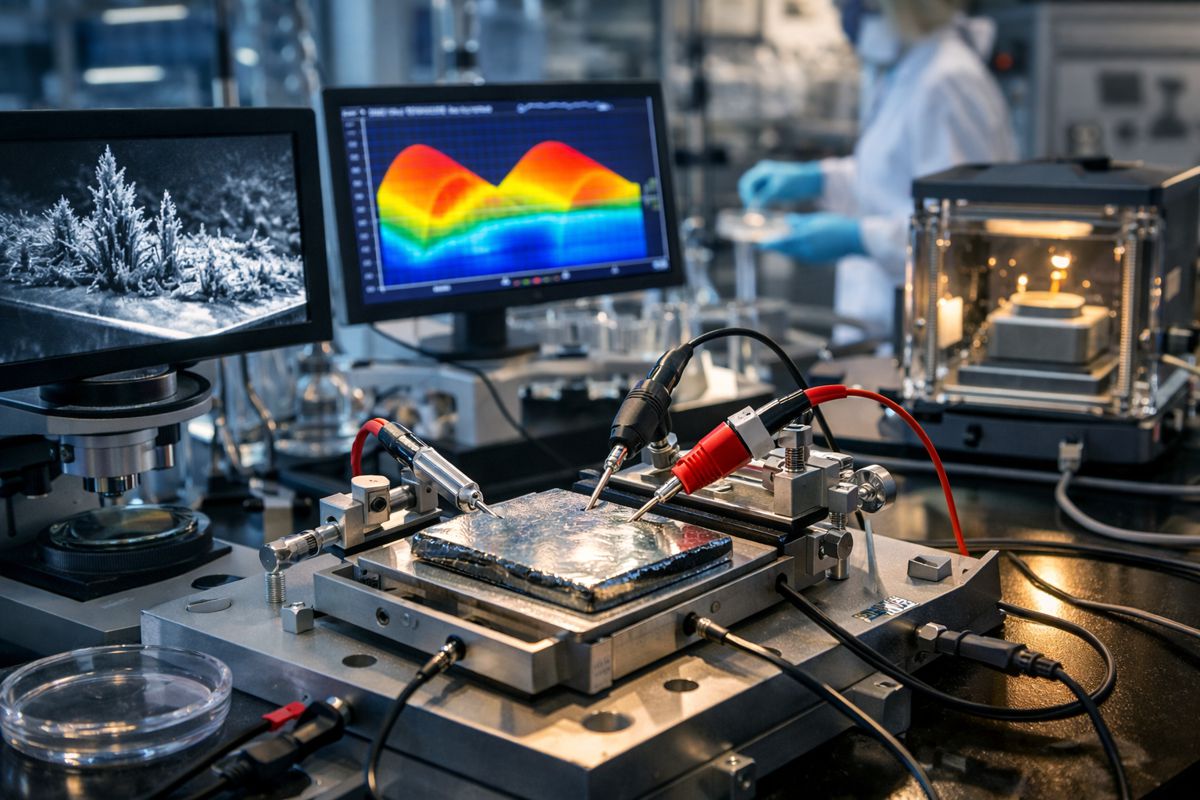

At the heart of Arbe’s approach is a radar architecture designed from the ground up for AI workloads. The company’s automotive-grade sensor produces a raw point cloud of more than 20,000 detections per frame, generated by a 2,304-channel array. This detection density far exceeds that of conventional automotive radar and gives advanced perception and decision-making algorithms a rich data foundation to work from.
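In MIMO (multiple-input, multiple-output) radar, each transmit/receive antenna pair acts as one virtual channel, so the channel count is the product of the two antenna counts. The article does not say that Arbe's 2,304 channels are virtual channels or how they are split; the sketch assumes they are, and the 48 × 48 configuration is a hypothetical split chosen only because it multiplies out to 2,304. The 3 × 4 comparison is a common layout for conventional single-chip automotive radar, and the helper name `virtual_channels` is hypothetical.

```python
# Illustrative sketch: virtual channel count of a MIMO radar array.
# Assumption: "channels" means virtual channels (one per Tx/Rx antenna pair);
# the 48 x 48 split is hypothetical, chosen only because 48 * 48 = 2,304.


def virtual_channels(n_tx: int, n_rx: int) -> int:
    """Each transmitter/receiver pair forms one virtual channel."""
    if n_tx <= 0 or n_rx <= 0:
        raise ValueError("antenna counts must be positive")
    return n_tx * n_rx


if __name__ == "__main__":
    hd = virtual_channels(48, 48)          # assumed ultra-HD configuration
    conventional = virtual_channels(3, 4)  # common single-chip radar layout
    print(hd, conventional, hd // conventional)  # 2304 12 192
```

Broadly, more virtual channels allow a larger effective aperture, which is what enables finer angular separation and denser point clouds.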

Unlike legacy radar systems, which produce sparse object detections, Arbe’s platform delivers four-dimensional imaging, measuring range, azimuth, elevation and velocity for every detection. That depth of information allows perception software to identify, classify and track objects with far greater accuracy. Paired with NVIDIA’s in-vehicle accelerated computing, the result is a perception pipeline intended to process complex highway scenarios in real time while keeping cost and scalability in check.
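To make the four dimensions concrete, the sketch below models one detection as range, azimuth, elevation and radial velocity, and converts the angular measurements into Cartesian coordinates in the sensor frame. The class name, field names, axis conventions (x forward, y left, z up) and the velocity sign convention are assumptions for illustration, not Arbe's data format.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Detection4D:
    """One 4D radar detection (hypothetical format, for illustration only)."""

    range_m: float        # radial distance to the reflector, metres
    azimuth_rad: float    # horizontal angle, positive to the left of boresight
    elevation_rad: float  # vertical angle, positive above boresight
    velocity_mps: float   # radial (Doppler) velocity; negative means approaching (assumed)

    def to_cartesian(self) -> Tuple[float, float, float]:
        """Return (x forward, y left, z up) in metres in the sensor frame."""
        ground = self.range_m * math.cos(self.elevation_rad)  # projection onto the horizontal plane
        x = ground * math.cos(self.azimuth_rad)
        y = ground * math.sin(self.azimuth_rad)
        z = self.range_m * math.sin(self.elevation_rad)
        return x, y, z


if __name__ == "__main__":
    # Two stationary reflectors at the same range and azimuth, separated only by
    # elevation, e.g. a stopped car in the lane and a sign gantry above it.
    # Velocities are roughly what an ego vehicle at ~130 km/h would measure.
    car = Detection4D(range_m=150.0, azimuth_rad=0.0, elevation_rad=0.0, velocity_mps=-36.1)
    gantry = Detection4D(range_m=150.0, azimuth_rad=0.0, elevation_rad=0.04, velocity_mps=-36.1)
    print(car.to_cartesian())     # (150.0, 0.0, 0.0)
    print(gantry.to_cartesian())  # z is about 6.0 m above the sensor
```

Without the elevation measurement, both reflectors would collapse to the same (x, y) position, which is one reason elevation helps separate overhead structures from obstacles in the lane.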

Democratizing Advanced Autonomous Technology

Cost has long been a limiting factor in the deployment of sophisticated autonomous systems. High-resolution perception has often relied on expensive sensor suites and heavyweight computing platforms, restricting adoption to premium vehicle segments or tightly controlled pilot programmes. Arbe’s strategy challenges that model by combining affordability with performance.

By aligning its high-performance radar with NVIDIA’s automotive computing ecosystem, Arbe is positioning advanced perception as a viable option for a broader range of manufacturers. The collaboration aims to reduce overall system costs while improving redundancy and reliability, a combination that resonates strongly with automakers facing tightening safety regulations and competitive pressure to deliver advanced driver assistance and autonomy at scale.

Executive Perspective on a Shifting Landscape

The ambition behind the collaboration is clear from the company’s leadership. Kobi Marenko, co-founder and Chief Executive Officer of Arbe, framed the initiative as a step change for the industry: “This initiative allows us to create a new benchmark for perception in all conditions. The collaboration accelerates the adoption of safer, more reliable, and more affordable autonomous driving solutions for automakers worldwide.”

He added that the live demonstrations at CES will focus on real-world performance rather than theoretical capability: “At CES, we are demonstrating our ultra-HD radar in action and how it navigates complicated highway scenarios at true highway speeds.” The emphasis on operational realism reflects growing industry scrutiny of autonomous claims and a demand for measurable, repeatable results.

Why Long-Range Accuracy Matters at Highway Speeds

Eyes-off driving on motorways introduces a non-negotiable requirement for long-range perception. A vehicle travelling at up to 130 kilometres per hour needs sufficient forward visibility to respond smoothly and predictably to hazards. Detecting vehicles, debris or stationary obstacles at distances approaching 300 metres is essential if braking, lane changes or evasive manoeuvres are to be executed without sudden or disruptive behaviour.

Arbe’s ultra-HD radar has been engineered to meet that requirement. The system delivers point cloud detections beyond 300 metres while maintaining the resolution and dynamic range needed to separate closely spaced objects. This capability supports smoother, more human-like driving behaviour, reducing abrupt interventions and improving passenger comfort, an often overlooked but critical factor in consumer acceptance.
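The long-range requirement can be checked with basic kinematics. The sketch converts 130 km/h to metres per second, computes how long 300 metres lasts at that speed, and estimates the distance needed to stop from that speed as reaction distance plus braking distance, d = v·t_r + v²/(2a). The 1.0 s reaction time and 3.0 m/s² deceleration are illustrative assumptions for a gentle, non-emergency stop, not figures from Arbe or NVIDIA.

```python
# Back-of-envelope check of the highway detection-range requirement.
# Assumptions (illustrative only): 1.0 s system reaction time and
# 3.0 m/s^2 comfortable braking deceleration.


def kmh_to_mps(speed_kmh: float) -> float:
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


def time_to_cover(distance_m: float, speed_kmh: float) -> float:
    """Seconds until the vehicle reaches an object distance_m ahead."""
    return distance_m / kmh_to_mps(speed_kmh)


def stopping_distance(speed_kmh: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance covered during the reaction time plus constant-deceleration braking."""
    v = kmh_to_mps(speed_kmh)
    return v * reaction_s + v * v / (2.0 * decel_mps2)


if __name__ == "__main__":
    print(f"{kmh_to_mps(130):.1f} m/s")                          # 36.1 m/s
    print(f"{time_to_cover(300, 130):.1f} s to cover 300 m")     # 8.3 s to cover 300 m
    print(f"{stopping_distance(130, 1.0, 3.0):.0f} m to stop")   # 253 m to stop
```

Under these assumptions a gentle stop from 130 km/h needs roughly 250 metres, so detection at around 300 metres leaves a margin for classification and tracking before braking begins. Halving the deceleration to 1.5 m/s² would raise the figure to roughly 470 metres, which shows how sensitive the requirement is to how smoothly the vehicle is expected to respond.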

Reliable Perception in All Conditions

One of radar’s enduring strengths is its resilience in adverse weather and low-visibility environments. Snow, sleet, heavy rain and fog can degrade camera and LiDAR performance, creating blind spots precisely when reliable perception is most needed. Arbe’s radar is designed to provide consistent, dependable output regardless of lighting or weather.

When combined with NVIDIA’s accelerated computing, this robustness translates into predictable system behaviour. Consistency is central to building trust, both for regulators assessing safety cases and for drivers expected to hand over control. By delivering stable perception at highway speeds, the platform supports eyes-off capability that behaves in a manner drivers can intuitively understand and anticipate.

Live Demonstrations at CES 2026

Arbe will reinforce its technical claims with live demonstrations at CES 2026, located at Booth 4551 in the West Hall of the Las Vegas Convention Center. These demonstrations are designed to show how the radar platform performs within realistic perception stacks rather than isolated test environments.

Key demonstrations include:

- AI-Based Occupancy Grid: In collaboration with Perciv AI, Arbe will present an AI-driven occupancy grid that generates a detailed spatial map around the vehicle, highlighting occupied and free space for advanced planning and control functions.

- LiDAR-Like Performance Using HD Radar: Arbe will illustrate how its high-definition radar can deliver performance comparable to LiDAR, enabling reductions in overall sensor suite cost while strengthening redundancy.

- Processing on NVIDIA DRIVE AGX Orin: High-resolution radar data will be processed on the NVIDIA DRIVE AGX Orin platform, demonstrating real-time perception using production-ready in-vehicle hardware.
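The occupancy-grid demonstration uses an AI model from Perciv AI whose internals the announcement does not describe. For orientation only, the sketch below shows the classic geometric approach to the same idea, not the AI-driven method: each radar detection marks its grid cell as occupied, and cells sampled along the straight line from the sensor to that detection are marked free. The grid size, cell size, state constants and function names are hypothetical choices for illustration.

```python
import math
from typing import List, Optional, Sequence, Tuple

UNKNOWN, FREE, OCCUPIED = -1, 0, 1  # hypothetical cell states


def to_cell(x: float, y: float, n: int, cell_m: float) -> Optional[Tuple[int, int]]:
    """Map sensor-frame (x forward, y left) metres to (row, col); sensor sits at row 0, centre column."""
    row = math.floor(x / cell_m)
    col = math.floor(y / cell_m) + n // 2
    if 0 <= row < n and 0 <= col < n:
        return row, col
    return None


def occupancy_grid(points: Sequence[Tuple[float, float]], n: int = 20,
                   cell_m: float = 1.0) -> List[List[int]]:
    """Ray-casting occupancy grid: free space up to each detection, occupied at the detection."""
    grid = [[UNKNOWN] * n for _ in range(n)]
    step = cell_m / 2  # half-cell sampling; may miss cells the ray only clips at a corner
    for x, y in points:
        end = to_cell(x, y, n, cell_m)
        if end is None:
            continue  # detection lies outside the grid
        dist = math.hypot(x, y)
        for i in range(int(dist / step)):
            s = i * step / dist  # fraction of the way from sensor to detection
            cell = to_cell(x * s, y * s, n, cell_m)
            if cell is not None and grid[cell[0]][cell[1]] != OCCUPIED:
                grid[cell[0]][cell[1]] = FREE
        grid[end[0]][end[1]] = OCCUPIED  # the detection itself always wins
    return grid


if __name__ == "__main__":
    grid = occupancy_grid([(5.5, 0.5), (8.2, -3.7)])
    print(grid[5][10], grid[2][10], grid[6][10])  # 1 0 -1
```

Cells beyond a detection stay unknown because the radar cannot see behind the reflector; a learned model can, in principle, infer more than this simple geometric rule allows.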

Redefining Radar’s Role in Autonomous Systems

Arbe’s broader vision extends beyond a single product cycle. The company positions its radar chipset as a foundational technology that can scale from advanced driver assistance systems to hands-free, eyes-off driving and ultimately full vehicle autonomy. Arbe says the platform delivers up to 100 times more detail than traditional radar systems, enough to address critical use cases such as free-space mapping in both highway and urban environments.

This scalability has implications across passenger, commercial and industrial vehicle segments. From long-haul trucks requiring reliable perception in all weather, to industrial vehicles operating in dusty or poorly lit environments, ultra-high-resolution radar offers a versatility that complements other sensing modalities and, in some cases, can substitute for them.

A Step Toward Trustworthy Autonomy

The path to widespread autonomous adoption is paved as much with trust as with technology. Systems must not only work, but work consistently, transparently and affordably. By combining ultra-HD radar with accelerated computing, Arbe and NVIDIA are addressing several of the industry’s most persistent barriers in one move.

As live demonstrations at CES 2026 put the technology under scrutiny, the collaboration will be closely watched by automakers, suppliers and policymakers alike. If the platform delivers on its promise, it may mark a turning point in how radar is perceived: no longer as a supplementary sensor, but as a central pillar of next-generation autonomous mobility.