Understanding Spatial Intelligence and Robot Spatial Awareness

Machines may excel at calculations, repetitive tasks and data processing, yet they still struggle with one of the most fundamental elements of human awareness: interpreting the world through sight. Humans subconsciously track spatial relationships, gauge distances and rely on constant sensory cues to navigate their surroundings. Robots, on the other hand, depend entirely on the quality of the data used to train them. When that data lacks depth or nuance, even the most advanced systems are left guessing.

Researchers have long attempted to improve robotic visual perception, but most training models rely on two-dimensional images or incomplete spatial references. This limitation makes it difficult for robots to understand where an object sits in space, how it relates to neighbouring items or how its placement might affect an interaction. Without deep spatial comprehension, robots can easily misinterpret instructions, misplace objects or misjudge distances.

The need for high-quality spatial reasoning grows more urgent as autonomous systems take on increasingly complex tasks across manufacturing, logistics, healthcare and assistive robotics. As Luke Song, lead author of a recent study from The Ohio State University, put it: “To have true general-purpose foundation models, a robot needs to understand the 3D world around it. So spatial understanding is one of the most crucial capabilities for it.”

Introducing The RoboSpatial Framework

In response to these challenges, researchers have developed RoboSpatial, a training dataset designed specifically to enhance three-dimensional reasoning in robotics. Presented at the Conference on Computer Vision and Pattern Recognition, the dataset represents a significant leap forward in machine perception.

RoboSpatial contains over one million real-world indoor and tabletop images, thousands of high-resolution 3D scans and roughly three million spatial labels. Each scene is captured both as an egocentric 2D image and a full 3D scan, enabling the model to learn how flat imagery corresponds to actual geometry. This dual approach mirrors the way humans interpret depth cues by combining perspective, context and prior experience.
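The link between a flat image and the geometry behind it can be illustrated with the standard pinhole camera model. The following is a minimal sketch and not RoboSpatial's own code: the `PinholeCamera` class and the intrinsic values (`fx`, `fy`, `cx`, `cy`) are hypothetical, chosen only for illustration, and the camera frame is assumed to have x pointing right, y pointing down and z pointing forward.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PinholeCamera:
    """Hypothetical camera intrinsics (assumed values, not from the dataset)."""
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels

    def project(self, x: float, y: float, z: float) -> Optional[Tuple[float, float]]:
        """Map a 3D point in the camera frame (metres) to pixel coordinates.

        Returns None for points at or behind the camera plane, which
        cannot appear in the image.
        """
        if z <= 0:
            return None
        u = self.fx * x / z + self.cx
        v = self.fy * y / z + self.cy
        return (u, v)


# Example: a point 2 m straight ahead lands on the principal point.
camera = PinholeCamera(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
centre_pixel = camera.project(0.0, 0.0, 2.0)
offset_pixel = camera.project(0.4, 0.0, 2.0)
```

Dividing by `z` is what discards depth: many different 3D points project to the same pixel, which is why pairing each image with a 3D scan gives a model information that the image alone cannot.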

Unlike traditional datasets that simply capture where objects appear, RoboSpatial maps their relationships within a scene. This includes how far they sit from one another, whether one item blocks another and how each object might move within a confined space. According to the research team, this realistic grounding allows robots to reason more accurately during physical manipulation tasks.
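Relationships of this kind can be expressed very simply once each object has a 3D position and a 2D bounding box. The sketch below is illustrative only and is not the labelling procedure used by the research team: the `SceneObject` type, its fields and the occlusion rule (overlapping image boxes plus a depth comparison) are assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SceneObject:
    """Hypothetical record for one annotated object."""
    name: str
    center: Tuple[float, float, float]  # (x, y, z) in the camera frame, metres
    box: Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max), pixels


def distance(a: SceneObject, b: SceneObject) -> float:
    """Euclidean distance between the two object centres, in metres."""
    return math.dist(a.center, b.center)


def boxes_overlap(a: SceneObject, b: SceneObject) -> bool:
    """True if the two image-space bounding boxes intersect."""
    return (a.box[0] < b.box[2] and b.box[0] < a.box[2]
            and a.box[1] < b.box[3] and b.box[1] < a.box[3])


def occludes(front: SceneObject, back: SceneObject) -> bool:
    """Rough occlusion test: boxes overlap and `front` is nearer the camera."""
    return boxes_overlap(front, back) and front.center[2] < back.center[2]


mug = SceneObject("mug", (0.0, 0.0, 1.0), (100, 100, 200, 200))
laptop = SceneObject("laptop", (0.3, 0.0, 1.4), (150, 80, 400, 260))

gap_in_metres = distance(mug, laptop)
mug_blocks_laptop = occludes(mug, laptop)
```

A bounding-box test like this overestimates occlusion for irregular shapes; it is meant only to show how a relational label differs from a label that records where an object appears.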

Spatial Context

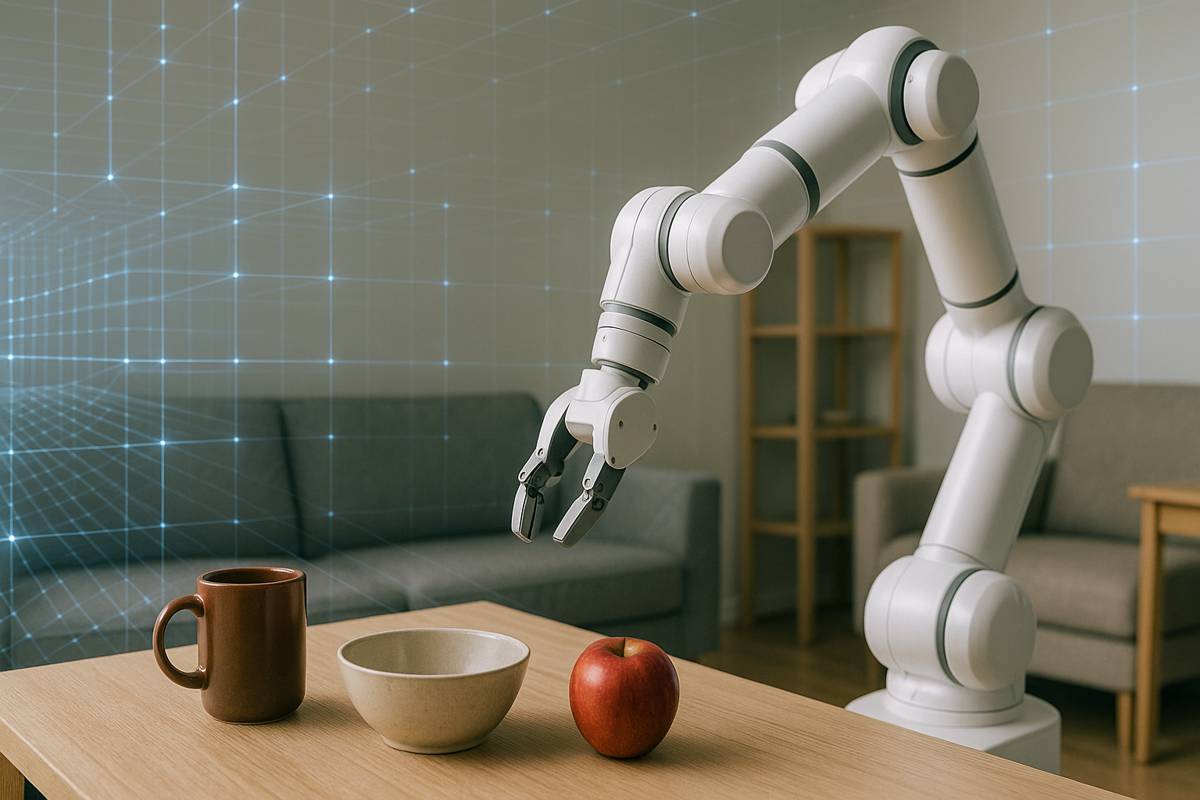

For most existing models, recognising a “bowl on a table” is relatively straightforward. Identifying the exact position of the bowl, determining whether it needs moving or understanding how it fits among other items is much more difficult. These shortcomings often lead to clumsy movements or errors in practical situations.

RoboSpatial addresses this shortfall by testing spatial reasoning across a range of increasingly complex scenarios. For example, the model is required to rearrange objects on a tabletop, manage obstacles or interpret new configurations that differ from its original training data.

Song commented on the impact of this approach: “Not only does this mean improvements on individual actions like picking up and placing things, but also leads to robots interacting more naturally with humans.” This natural interaction is particularly relevant in applications where human safety or comfort is a priority.

Testing The Framework In Real-World Conditions

To evaluate the dataset’s capabilities, researchers applied RoboSpatial to several robotic systems, including the Kinova Jaco, an assistive arm widely used by individuals with disabilities. The robot was trained to answer closed-ended spatial questions such as “can the chair be placed in front of the table?” or “is the mug to the left of the laptop?”
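To make the idea of a closed-ended spatial question concrete, the sketch below answers a “left of” query from known 3D positions. It is an assumption-laden illustration rather than the method used in the study: the function name `answer_left_of`, the `margin` parameter and the choice of an egocentric camera frame with x pointing right are all hypothetical.

```python
from typing import Dict, Tuple

Position = Tuple[float, float, float]  # (x, y, z) in the camera frame, metres


def answer_left_of(positions: Dict[str, Position],
                   subject: str,
                   reference: str,
                   margin: float = 0.05) -> str:
    """Answer "is <subject> to the left of <reference>?" with "yes" or "no".

    Assumes an egocentric frame in which x increases to the viewer's right.
    The margin (metres) avoids answering "yes" when the two objects are
    almost level with each other.
    """
    subject_x = positions[subject][0]
    reference_x = positions[reference][0]
    return "yes" if subject_x < reference_x - margin else "no"


scene = {
    "mug": (-0.3, 0.0, 1.0),
    "laptop": (0.1, 0.0, 1.2),
}
print(answer_left_of(scene, "mug", "laptop"))   # prints "yes"
print(answer_left_of(scene, "laptop", "mug"))   # prints "no"
```

The answer depends on the viewpoint: the same scene seen from the opposite side of the table would reverse both results, which is one reason a trained model needs 3D grounding rather than fixed rules.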

Performance significantly exceeded that of previous baseline models. Robots trained on RoboSpatial not only demonstrated improved accuracy in object placement but also showed an ability to generalise beyond their training conditions. This indicates that the system is learning broader spatial concepts rather than memorising specific layouts.

Such improvements could support a wide range of practical applications. In warehouse automation, enhanced perception would allow robots to pack items more efficiently. In construction robotics, understanding depth and spatial alignment could improve precision in surveying, inspection and material handling. Assistive robots may offer more fluid movements when helping users with daily tasks.

Implications For Robotics And AI

Robotic autonomy relies heavily on context. Without an accurate understanding of how objects and people move through environments, even the most advanced AI can struggle to perform reliably. Misjudging distances or misplacing objects can create hazards in sensitive environments such as healthcare, industrial plants or construction sites.

Song emphasised the wider significance: “These promising results reveal that normalising spatial context by improving robotic perception could lead to safer and more reliable AI systems.”

The research team suggests that RoboSpatial could function as a foundational resource for future robotic models. Its emphasis on depth, positioning and relational understanding makes it suitable for applications extending well beyond household tasks. Emerging fields such as aerial robotics, autonomous vehicles and collaborative industrial robots all require the level of robust spatial reasoning this dataset aims to deliver.

Wider Research

Recent developments in spatial AI stand alongside a surge of interest in embodied intelligence systems. Research groups at MIT, Carnegie Mellon University and ETH Zurich are exploring how AI models can learn to navigate complex spaces by blending perception with reinforcement learning. Concurrent advances in 3D scene reconstruction, such as Neural Radiance Fields (NeRF), which NVIDIA and others have extended, and Meta’s work on large-scale 3D mapping, are pushing robotic vision even further.

These developments collectively point to a broader shift: robots are beginning to understand not just what objects are, but how they behave in real environments. Integrating this capability with high-quality datasets like RoboSpatial could accelerate progress across multiple domains.

The involvement of institutions including The Ohio State University and NVIDIA demonstrates strong industry–academia collaboration, while support from the Ohio Supercomputer Center underscores the computational scale required for such projects.

The Next Wave Of Capabilities

While robots still lag behind humans in intuitive understanding, RoboSpatial marks a major step toward bridging this gap. By exposing models to robust, realistic spatial cues, researchers are equipping machines with the knowledge they need to operate more naturally and confidently.

Song noted his optimism for the future: “I think we will see a lot of big improvements and cool capabilities for robots in the next five to ten years.” If current momentum continues, the next generation of robotic systems may navigate workshops, laboratories and homes with far greater precision than today’s counterparts.

Strengthening Spatial Understanding For Real-World Impact

The development of RoboSpatial signals a transformative moment for spatial AI. Its detailed mapping of indoor environments and rich annotation of spatial relationships offer a new foundation for robots that need to move, interact and adapt in dynamic settings. As industries increasingly embrace automation, the ability for machines to handle spatial tasks safely and intelligently will become essential.

Researchers acknowledge that more work lies ahead, yet the early success of RoboSpatial provides strong evidence that spatially aware robots are well within reach. With continued innovation, the field is poised to unlock breakthroughs that could reshape how humans and machines collaborate across countless sectors.